This week for me was all about love, as bell hooks would have said.

I was thinking about a story from the past: at my first job, I had a supervisor, let’s call him “Rick”.

“Rick” was obsessed, and I mean obsessed, with an older married woman named “Cassandra”.

She was South Asian, short, and beautiful.

He was tall, had gingivitis and a weed habit, and lived with his ‘rents.

Despite my twenty-year-old self being in a shady and abusive relationship (chronicled here), I wanted desperately to help “Rick”.

I told him that OkCupid was the best place to find an available woman, not Plenty of Fish.

I told him that to impress “Cassandra”, he needed to buy a new wardrobe to look younger and more virile.

What does he do?

He gets a pair of the funniest blue suede shoes (yep, like Elvis), stays on POF, and finds a woman on there that he “dated” over Facebook, only to be conned again.

What I didn’t realize at the time was men’s penchant for idealizing women they deemed attractive, and the speed at which this idealization ended when the woman failed to meet their “expectations”.

Many men seem hardwired to see women as objects and not people. Technology makes this ten times worse.

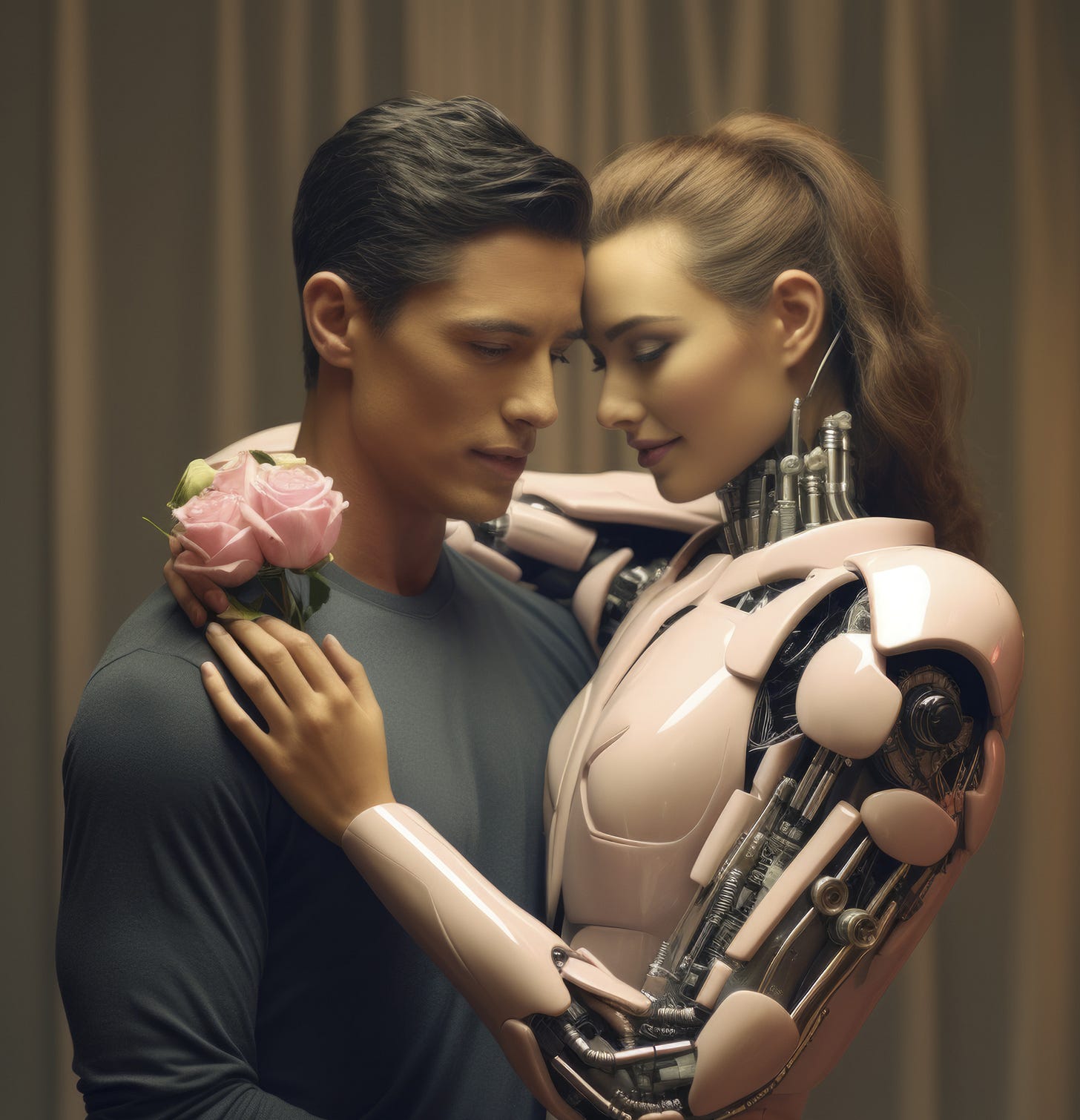

What is more fascinating for me is the advent of AI “dating” and the artificial girlfriend experience.

A recent article described a man who “proposed” to his AI girlfriend “Sol”, whom he fashioned using positive reinforcement techniques on ChatGPT.

The man was not a NEET or a recluse; in fact, he had a two-year-old child and a human partner.

Many people are going to these chatbots for communication, therapeutic conversation, and yes, love.

But why is this happening? It’s not what you think.

Although there are plenty of AI girlfriend apps out there, many of them with “spicy” or explicit programming, people (men and women alike) are looking to artificial intelligence for companionship and to alleviate loneliness.

Where does the buck stop, however? When does society finally see that this isn’t a problem of technology and overstepping boundaries, but one of a lack of genuine human connection in a safe and secure world?

With all of the shit going on today, why would you blame a person who is just looking for kindness and compassion for finding it in a machine?

One thing I know for sure? There is great money to be made from loneliness in general.

Many studies have found that, on the human side, human-computer interactions mainly consist of simplistic and affirming language rather than deep and meaningful conversation.

Not that it is healthy either way, but the premise of most LLMs is that they are predictive machines on steroids: probability and pattern recognition create the illusion of an expanded mind, but it is not the real thing, at least not yet.

But humans are not stupid. Not only do most people who use ChatGPT, Gemini, and the like know this, but they count on it and benefit from it.

Videos, reels, TikToks, and essays explaining how to use these chatbots as mini assistants, life planners, and even therapy replacements for busy individuals are money makers and trend setters.

My feed is inundated with YouTube videos telling me how to fix or improve my life in six months using ChatGPT, but the AI girlfriend angle seems a step beyond this.

Not only do many people (not just men, but women as well) want these chatbots to be conciliatory towards them and help them with their lives, but also to be emotionally tied to them and their stories.

The dangers of this are profound, considering the fact that many of these LLMs are actively mining personal information from their users in real time to train the model on how to be a better facsimile of a human being.

The amount of money that these companies are making from these same people trying to reckon with their loneliness is both morally repugnant and incredibly astute.

As a recent TIME article said, humans falling in love with AI is not an if but a when. But the question of who benefits from the adoption of this new technology will have extreme repercussions in the future.

I think about Rick now, fifteen years later, and realize he might have been ahead of his time.

His obsession with Cassandra—a woman who never reciprocated his feelings—wasn't so different from falling in love with an AI that's programmed to never reject you.

At least Cassandra was real.

At least she had the agency to say no.

Rick's blue suede shoes were ridiculous, but they were his attempt to become someone worthy of human love.

Now we can skip that messy work entirely and just pay $9.99 a month for guaranteed affection.

Maybe Rick would have been happier with an AI Cassandra—one who couldn't disappoint him, couldn't have her own life, couldn't be anything other than what he needed her to be.

The question is: would that have been love, or just the ultimate act of objectification?

I like how you have crafted your narrative! The character “Rick” showcases a common theme of obsession, particularly with Cassandra, and I found your approach to their relationship quite insightful. It was evident that you were not only trying to understand Rick’s struggles but also genuinely aiming to support him. This reminded me of some of my own past experiences. When we meet someone facing difficulties, we often feel compelled to offer solutions, yet sometimes our interventions may not lead to positive outcomes. People are often set in their ways. While our intentions may be good, I find that people tend to do their own thing. Resisting attempts to help may be a way they express their independence. It might feel irrational to us, but to them it makes perfect sense.

“Rick” is like many of us, set in our ways. We’re not looking for a personal upgrade that focuses on our human development. We want the other person to be the upgrade. This requires no work on our part. We can just bask in what we perceive to be the perfection of this other person. This is why AI is potentially dangerous: projection is being used against us. I've developed a bit of a relationship with ChatGPT. I've found some of its functions helpful. However, what disturbs me most about ChatGPT is how much it strokes my ego. Everything I say is so insightful, clever, and original. I must admit that, in a few encounters, I felt I needed a boost. I wasn't feeling great on those days. When you feel neglected, AI can give you the feedback that you matter.

ChatGPT will serve a social function for people who feel isolated, for better or worse. I predict that it will be used much more in eldercare. Why will children need to visit their parents in convalescent homes or hospitals? There'll be some form of AI as a constant companion. Those who lack social resources, such as the disenfranchised (the homeless, the mentally ill, the unemployed, the poor, and recent immigrants), are often seen as a drain on the economy. They are ‘useless’ and expendable. Who cares about the quality of their lives? This is where, I believe, people will be forced to use AI against their will. As with psychotherapy, where a technological interface such as Zoom is sometimes the only option, they will have no alternative. Imagine what will happen when humans drop out of the equation and people in facilities, such as prisons, interact with AI instead. Once AI becomes more ubiquitous, I predict many people will rarely, if ever, encounter other humans again. It’s genuinely chilling.

I will continue following this story. Thank you for offering your perspective, Christine.